DataStore Extension

Introduction

The DataStore permanent extension provides Object-Relational mapping functions to store and load data entities in a database.

The extension also provides a tool which allows the user to create and update the database scheme required for storing the entity information in the database.

Data Classes to SQL Schema Conversion

The DataStore extension creates a database table for each persistent Data Class.

Primitive values are stored in columns. Relations between Data Classes are stored in the dedicated relation table "DsRelation".

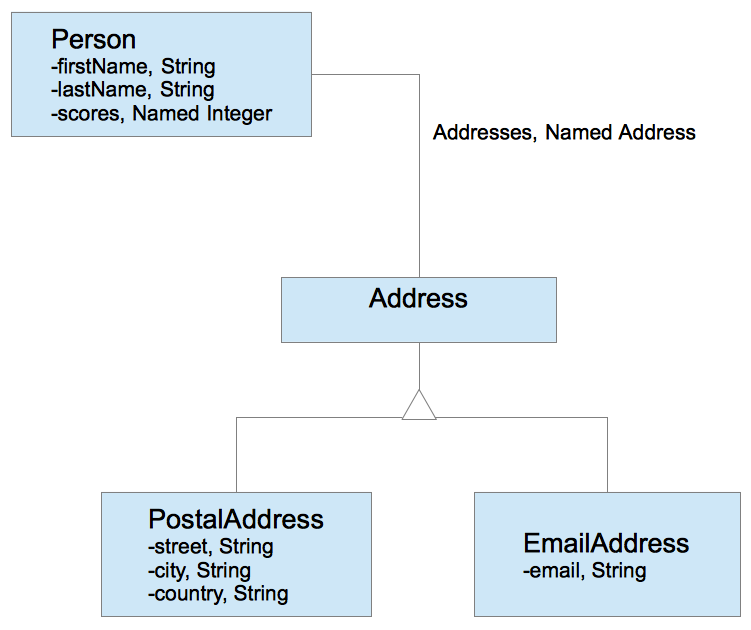

Consider the following data class structure:

A person has a firstname, lastname, a set of scores and a set of addresses.

There are two types of Addresses:

- The Postal Address has a street, a city and a country.

- The Email Address has a email.

The DataStore defines the following database schema for the above data classes:

-- table for Data Class Person

CREATE TABLE {SCHEMA}."DS_3U00tN7BSeu3wDfeArvJfQ" (

"awid" BIGINT PRIMARY KEY NOT NULL,

"firstName" VARCHAR(255),

"lastName" VARCHAR(255),

"scores" LONGTEXT

);

-- table for Data Class Address

CREATE TABLE {SCHEMA}."DS_QRBtlfpYTaStyB77tM7PlQ" (

"awid" BIGINT PRIMARY KEY NOT NULL

);

-- table for Data Class EmailAddress

CREATE TABLE {SCHEMA}."DS_f9pwUgK5RlyDu7ofzuafTw" (

"awid" BIGINT PRIMARY KEY NOT NULL,

"email" VARCHAR(255)

);

-- table for Data Class PostalAddress

CREATE TABLE {SCHEMA}."DS_Iq2KRrPTSKyJi34dbYlnAA" (

"awid" BIGINT PRIMARY KEY NOT NULL,

"city" VARCHAR(255),

"country" VARCHAR(255),

"street" VARCHAR(255)

);

Each class has a corresponding database table with a random UUID starting with the DS_ prefix. The table name can also be found in the metadata of a Data Class under the key “DataStore_tableName”. The table name remains unchanged even after renaming the ID of a Data Class.

Note: The classes mapped in versions prior to Appway 7 keep the original table name. For those classes, the metadata is added by the extension at startup and is equal to the class id. The DataStore extension performs the same process for data classes exported from versions prior to Appway 7 and imported in Appway 7 through an .AWEXPORT file.

All database tables have a primary key column called "awid".

- You cannot use the name "awid" for a Property inside a DataClass because this leads to a naming conflict in the tables of the DataStore. The DataStore already contains a column called "awid".

- The "awid" identifies a Data Entity managed by the DataStore and must be unique across all tables used by the DataStore.

Note: The attribute "Person.addresses" does not appear in the table definition for the Person class.This attribute defines a polimorphic relation between the Person and the Address class. A Person can be related to many different "Addresses" of runtime type "PostalAddress" or "EmailAddress". Relations between classes are stored in the "DsRelation" table.

-- DsRelation

CREATE TABLE {SCHEMA}."DsRelation"(

"sourceEntityId" NUMBER(38) NOT NULL,

"targetEntityId" NUMBER(38) NOT NULL,

"sourceType" varchar2(255) NOT NULL,

"targetType" varchar2(255) NOT NULL,

"name" varchar2(255) NOT NULL,

"key" varchar2(255) NULL

)

The DsRelation table is holding instance relation information. The semantics of the columns are defined here:

- sourceEntityId — the "awid" of the relation source

- targetEntityId — the "awid" of the relation target

- sourceType — the table name of the source entity

- targetType — the table name of the target entity

- name — the name of the relation property as defined in the Data Class

- key — the name or index of the value in case of a collection relation

Primitive Types

The following type mappings exist between FNZ Studio's primitive types and database types:

| FNZ Studio type | MySQL DB type | MSSQL DB type | Oracle DB type |

| Boolean | VARCHAR(5) ['false', 'true' | VARCHAR(5) ['false', 'true'] | VARCHAR2(5) ['false', 'true'] |

| Date Timestamp (Milliseconds since January 1, 1970) | BIGINT | BIGINT | NUMBER(38) |

| Double | DOUBLE | FLOAT | NUMBER |

| Float (deprecated, use Double instead) | DOUBLE | FLOAT | NUMBER |

|

Integer |

BIGINT | BIGINT | NUMBER(38) |

| Long | BIGINT | BIGINT | NUMBER(38) |

| String (<LEN >=10,20,50,100,255) | VARCHAR(<LEN>) | VARCHAR(<LEN>) | VARCHAR2(<LEN>) |

| String (<LEN>=4096) | MEDIUMTEXT | NVARCHAR(max) | CLOB |

| String (<;LEN>=max) | LONGTEXT | NVARCHAR(max | CLOB |

| Indexed or Named Collections of primitives | LONGTEXT | NVARCHAR(max) | CLOB |

Collection Types

Collections of primitive types are stored to CLOB columns.

Collection entries are separated using the ASCII character \U001E Key and value in Named Collection entries are separated using the ASCII character \U001F

| FNZ Studio representation | Database representation |

Indexed Boolean $maybe := [false, true]:Boolean

|

false\u001etrue

|

Named Boolean $maybe := {'foo'=false, 'bar'=true}:Any

|

foo\u001ffalse\u001ebar\u001ftrue

|

Insert / Update

The script function SaveDataEntity must be used for inserting a new record or updating an existing record.

// create person and addresses

Person $p := new Person;

$p.firstName := 'Jack';

$p.lastName := 'Tramiel';

$p.scores['Space Invaders'] := 1023;

$p.scores['Jounglehunt'] := 21544;

PostalAddress $adr1 := new PostalAddress;

$adr1.street := '123 Atari Plaza';

$adr1.city := 'New York';

$adr1.country := 'USA';

$p.addresses['home'] := $adr1;

EmailAddress $adr2 := new EmailAddress;

$adr2.email := 'jack.tramiel@atari.com';

$p.addresses['email'] := $adr2;

// save to database

SaveDataEntity($p, 'mysql', true);

If a new structure is saved, an insert operation is executed. If a previously saved entity is saved again, an update operation is executed.

The result of the SaveDataEntity call is the "awid" of the root entity that was saved.

Load

An entity can be loaded using its "awid".

// load the Person with "awid" == 1364375067065

LoadDataEntity(1364375067065, Person, 'mysql', true);

Delete

An entity can be deleted with the DeleteDataEntity function.

// $p has previously been saved or loaded from the data store

// recursively delete $p and all related entities

DeleteDataEntity($p, 'mysql', true);

Table Name Selection

The table name of a DataClass can be obtained using the GetDataStoreTableName function.

// ‘Person’ is a data class previously mapped to the database

GetDataStoreTableName(‘Person’);

Similarly, the GetDataStoreHistoryTableName function returns the name of the history table for a data class (see section Overview of UI Elements for more details).

Both GetDataStoreTableName and GetDataStoreHistoryTableName can be used with a specific version filter, e.g.

GetDataStoreTableName(‘Person’, ‘LatesCommittedFilter’);

Selection

Selection of a Data Entity requires building a query which returns the entity's "awid". The "awid" is then used for loading the entity. Queries must use quoted table and column names.

A query which uses primitive attributes of an entity is straight forward.

A query must use the functions described in Table Name Selection to resolve the table name. While DataClasses mapped with an older version of DataStore (i.e. up to version 3.2.3) can use the DataClass name adopter before the upgrade to the new DataStore release, we strongly recommend to always use the aformentioned function to avoid issues with renamings.

In the following example we query for the person that has been inserted in the "Insert / Update" section.

SqlQuery returns an Indexed Collection of Named Collections. Selected columns are accessible via nested inner collection by column name.

Person $p;

// define the query

String $query := 'SELECT \"awid\" FROM \"' & GetDataStoreTableName(Person) & '\" WHERE \"lastName\"=?';

// execute the query using the given indexed query parameter collection

Indexed Any $rows := SqlQuery('mysql', $query, ['Tramiel']:Any);

// loop through the results

Named Any $row;

ForEach $row In $rows Do

// get the "awid"

Long $awid := TOLONG($row['awid']);

// load the person

$p := LoadDataEntity($awid, Person, 'mysql', true);

PRINTLN($p);

End

Queries can use related instances.

In the following example a query is built which selects persons which have a home address in the USA.

For this query the "DsRelation" table is used.

// define the query

String $query := 'SELECT p.\"awid\" FROM \"' & GetDataStoreTableName(Person) & '\" p, ' & GetDataStoreTableName(PostalAddress) & ' a, \"DsRelation\" r WHERE ';

$query := $query & 'a.\"country\"=? AND a.\"awid\"=r.\"targetEntityId\" AND p.\"awid\"=r.\"sourceEntityId\"';

// execute the query using the given indexed query parameter collection

Indexed Any $rows := SqlQuery('mysql', $query, ['USA']:Any);

// loop through the results

Named Any $row;

ForEach $row In $rows Do

// get the "awid"

Long $awid := TOLONG($row['awid']);

// load the person

Person $p := LoadDataEntity($awid, Person, 'mysql', true);

End

The query above joins the "PostalAddress" and the "Person" via the "DsRelation" table.

The query uses the fact that at the moment only one PostalAddress is associated with a person.

The more verbose query where the relation name and key are specified also works:

// define the query

String $query := 'SELECT p.\"awid\" FROM \"Person\" p, \"PostalAddress\" a, \"DsRelation\" r WHERE ';

$query := $query & 'a.\"country\"=? AND a.\"awid\"=r.\"targetEntityId\" AND p.\"awid\"=r.\"sourceEntityId\" ';

$query := $query & ' AND r.\"name\" = ? AND r.\"key\"=?';

// execute the query using the given indexed query parameter collection

Indexed Any $rows := SqlQuery('mysql', $query, ['USA', 'addresses', 'home']:Any);

Load Data Entities

An indexed collection of entities of the same type can be loaded using the "LoadDataEntities" function.

The function allows a user to specify what exactly will be loaded from the database, this is done with the third parameter SQL query.

// define the query to load everything

String $personTableName := GetDataStoreTableName(Person);

String $query := 'SELECT * FROM \"'& $personTableName &'\" WHERE lastName=?';

Indexed Person $persons := LoadDataEntities('mysql', Person, $query, ['Tramiel']:String);

// only load the "name" value.

$query := 'SELECT \"awid\",\"name\" FROM \"'& $personTableName &'\" WHERE lastName=?';

// ATTENTION: "awid" must be selected otherwise records cannot be loaded!

$persons := LoadDataEntities('mysql', Person, $query, ['Tramiel']:String);

Here are the parameters:

- datasource — Name of the data source

- type — Value type of the collection

- query — SQL query which must select all columns that should be mapped to the created entities. The "awid" MUST be part of these columns. If the entity will be saved again it is recommended to load "ALL" column in order not to lose data.

- attributes — Attributes of the query for the sql statements

- recursive — Load entities and child entities (default is false, do not load recursive)

- limit — Maximum number of elements to return (0, all)

- firstElement — Index of the first element to be loaded (0, first element)

- lastElement — Index of the last element to be loaded (0, last element)

- depth — Depth for loading child entities (-1 all, 1 only first level children, ...), the recursive attribute decides if recursive loading must be applied, depth decides the maximum depth of recursion

Controlling the range of IDs

Starting from DataStore extension version 3.0.18, you can control the range of technical IDs ("awids") that are generated by the DataStore extension and used to identify entities in the database. This feature supports the use case where multiple DataStore extensions (from different FNZ Studio environments) need to write data to the same DataStore tables. Each DataStore "instance" can have its own range of IDs that will be generated to avoid ID duplication and overlapping in the tables. The ID range to be used by the DataStore can be specified using the two extension configuration properties:

- id.range.start

- If id.range.start is specified, the DataStore extension generates IDs that are higher than id.range.start.

- id.range.max

- If id.range.max is specified, the DataStore extension will NOT generate IDs that are higher than id.range.max.

Allowed Procedures for Handling Data Classes

The data classes mapped to the database embed metadata that must be unique across the whole application. Following is a list of allowed procedures to handle the cloning, undeleting and import of data classes.

- ID RENAME: The class id can be renamed safely. The table name is independent of the class id and is maintained after id renaming.

- DUPLICATE: The duplication operation is safe. The DataStore removes the metadata related to the extension from the clone class. Therefore, the clone data class is not mapped to the database, while the cloned data class keeps the previous mapping.

- MOVE: Moving a class between two different Packages is safe. Consider that moving a data class from Package S to Package D results in the deletion of the class in Package S. Therefore, undeleting the class from Package S causes the duplication of the metadata, including the table name. This can be fixed by removing the “DB” and “DataStore_tableName” metadata from one of the two classes. In order to avoid this manual update, and in case you need to keep the class in Package S, first clone the class in Package S, and then move the cloned class in Package D.

- IMPORT: This operation is unsafe due to possible conflicts with existing metadata.

- .awexport IMPORT: In case of missing metadata for the table name, the extension adds it to the data classes with “DB” metadata set to true. Be aware that this may cause the duplication of table names whenever:

- a data class in Studio and a data class in the .awexport file exist with the same metadata “DataStore_tableName”.

- a data class in Studio has the same metadata “DataStore_tableName” as the class id of a data class in the .awexport file which has metadata “DB” set to true and no “DataStore_tableName” metadata. Both conflicting cases need to be solved manually by fixing the metadata content.

- .awdeployment IMPORT: Importing data classes through an .awdeployment file does not perform any automatic update of the metadata. All the importing issues described for the .awexport file also apply to this case.

- .awexport IMPORT: In case of missing metadata for the table name, the extension adds it to the data classes with “DB” metadata set to true. Be aware that this may cause the duplication of table names whenever:

DataStore UI

Prerequisites

Be sure to use the extension version compatible with your FNZ Studio installation.

Supported Databases are:

- MySQL

- Microsoft SQL Server

- DB2

- Oracle Server

- H2 Database

For a list of supported databases, see the Technical Requirements article.

Data Store Administration Tool

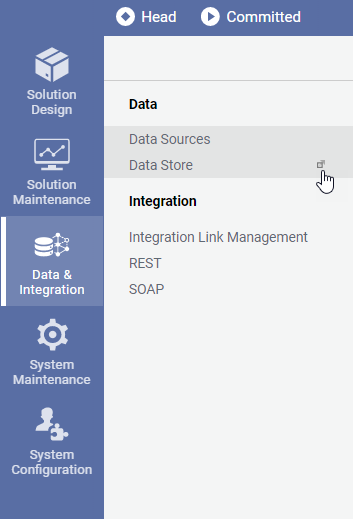

In Studio, enter the Data Store administration tool through Data&Integration > Data > Data Store .

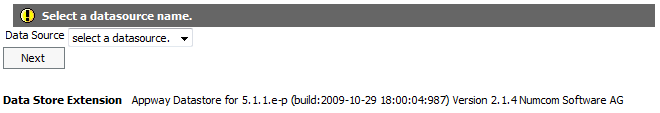

Data Source Selection

Select the data source in the dropdown element. The selected data source is used as storage target of the subsequent operations.

Overview

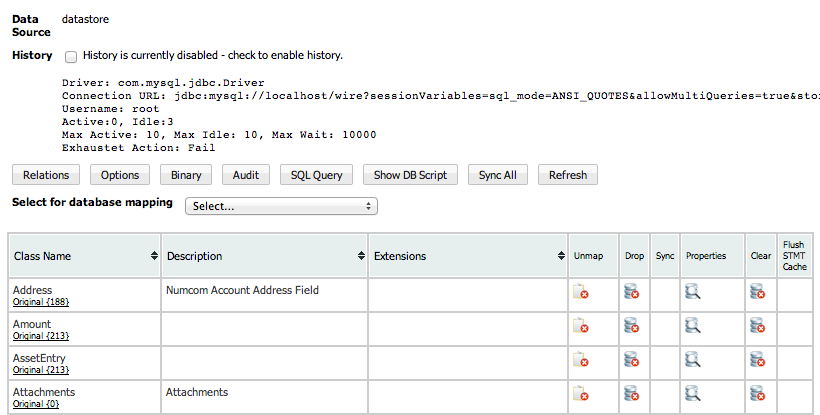

The initial overview displays the above interface. The elements of this interface are described below.

Overview of UI Elements

- Data Source — The name of the selected data source.

- History — Indicates whether automatic historization is available.

- Select for database mappings — Defines the data class to be mapped to the database.

- Table of mapped classes — Displays all mapped data classes.

- Back button — Returns you to the data source selection

- Buttons — Open views for specific tables used for data store operations:

- Relations: Shows the first ten results of the DsRelation table, which displays the relations between data class entities. For more information and examples on the DsRelation table, see: Selection.

- Options: Shows the first ten results of the DsOption table, which displays the data store options.

- Binary: Shows the first ten results of the DSBinaryData table, which contains a list of the binary files uploaded in the database. Binary files can be uploaded by using, for example, an

UploadBinaryData($fileName, $fileExtension, $filePath, $dataSourceName)script function. - Audit: Shows the first ten results of the DsAudit table, which is used for auditing the persistent storage functions INSERT, UPDATE, DELETE performed on the data class entities (READ is excluded). For each entity (‘awid’ column), the table displays information on what kind of operation has been performed (‘op’ column), when (‘timestamp’ column) and by whom (‘userId’ column).

As the DsAudit table can become very big and hinder performance, you can disable the Audit button by setting the `nm.datastore.audit` configuration property to `false`. Consider that disabling this feature does not remove the data from the table, but it can improve auditing performance impact.

* SQL Query: Allows executing an SQL query.

* Show DB Script: Displays the SQL for all the tables and triggers used to bind the mapped data classes to the database (the content can change depending on whether the History check box is selected or not). Consider that the reported SQL depends on the chosen data source dialect (for example, Oracle or MS SQL).

* Sync All: Synchronizes all mapped data classes and creates the corresponding table. If the History check box is selected, history tables and triggers are also created.

* Refresh: Refreshes the current page.

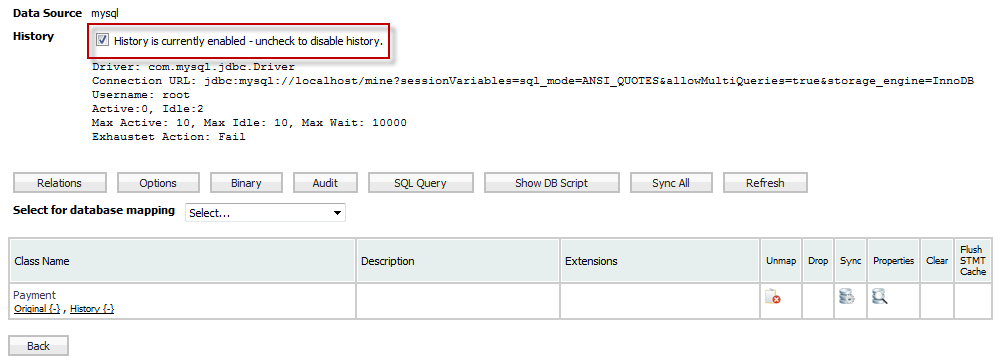

Mapped Classes Table

- Class Name – States the name of the mapped class. In smaller font the number of "Original" (current) entities and the number of "History" (old) entity-versions are indicated.

- Description – Displays the description from the Dataclass definition in FNZ Studio Composition.

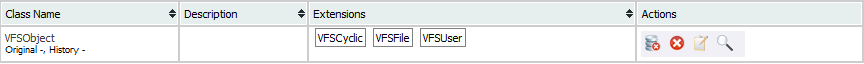

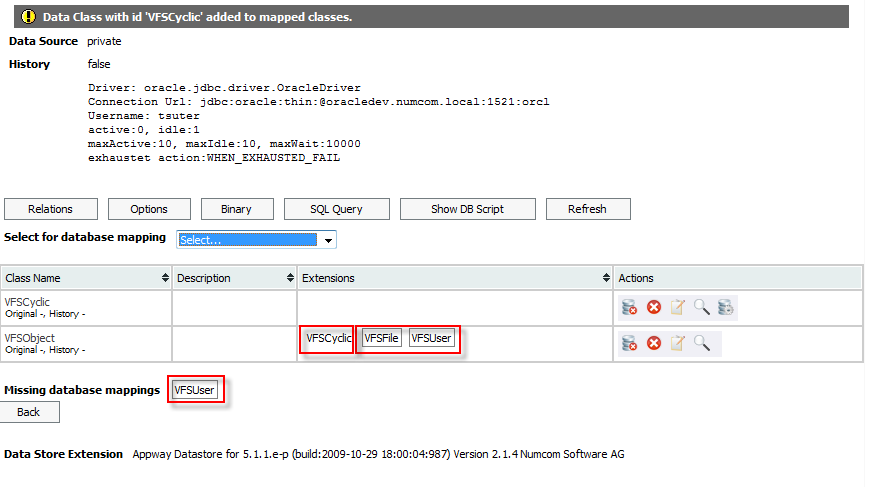

- Extensions – A list of classes which extend the current class. Some names might have a border which indicates that the class is not yet mapped to the database. Click on these class names to add the corresponding class to the mapped classes.

- Actions – Contains actions that can be executed on the row. Actions are: "drop database tables for the class", "remove class from mapped classes", "define attribute sizes", "view table values", and "synchronize table with class definition".

Dropping database tables may affect running Processes which rely on data previously persisted for the unmapped Data Class. Therefore, you are recommended to unmap only the Data Classes and not to drop the corresponding table.

After adding some classes to the mapped classes table we might encounter the following view:

Here we find two classes mapped to the database. "VFSCyclic" and "VFSObject". The "Missing database mappings" list classes which are referenced by the already mapped classes but are itself nat mapped. Clicking the class name will add the class to the mapped classes.

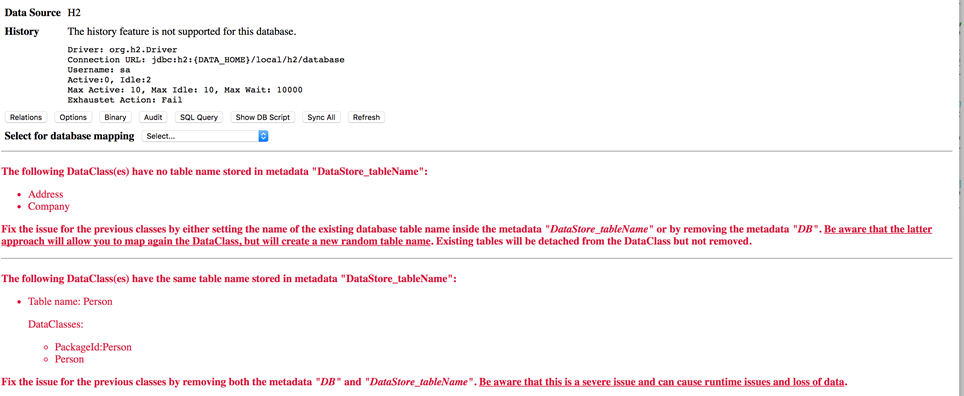

Error Reporting for Corrupted Metadata

An error message is shown in case the metadata of the mapped data classes has accidentally been corrupted.

The previous message appears whenever a data class is mapped to the database and either:

- The “DataStore_tableName” metadata is not present or empty.

- Two data classes share the same table name, from “DataStore_tableName” metadata. A missing table name can be fixed by either adding the missing metadata or by removing the “DB” Metadata. The first solution implies that the table name is unique. The second solution allows remapping the data class, but generates a new random table name (see section Data Classes to SQL Schema Conversion). Therefore, consider that any table already mapping the data class is detached but not removed from the database schema. Two classes may have the same table name when they have been duplicated following a forbidden procedure (see section Allowed Procedures for Handling Data Classes). This issue can be fixed by removing the metadata from one of the two classes.

NOTE: The errors are also reported by the DataStore extension sensor. The sensor runs every minute to validate data classes mapped by the extension. The sensor can be triggered manually under System Maintenance > System Overview > Health by right-clicking on the DataStore extension sensor and selecting the Query Sensor button.

Data Versioning

User Interface

The DataStore extension provides a data history feature. This feature can be enabled and disabled with the global history switch in the data store overview.

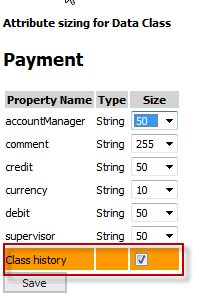

If the feature is enabled the user may decide to enable or disable history on a per table basis. The switch can be found in the Properties detail view.

Background

The data history feature creates an additional database table per class which contains the history of the data. The name of a history table follows the naming pattern "DataClass Table Name"+"AWHST".

In addition to the "AWHST" table, three triggers for INSERT, UPDATE and DELETE are created. Because of these triggers the history function is FNZ Studio independent. That means all data modifications on the database trigger history entries. Not only data modifications executed by FNZ Studio.

Each history entry contains the complete data record which consists of modified and unmodified columns of the record.

Lets assume we have a Payment defined as followed:

DataClass Payment Begin

Property[amount, Double, None];

Property[tax, Double, None];

Property[supervisor, String, None];

Property[debit, String, None];

Property[comment, String, None];

Property[credit, String, None];

Property[accountManager, String, None];

Property[currency, String, None];

End

If we modify the Payment amount and save the payment to the database, the main "Payment" table is updated. Because we executed an UPDATE query, the trigger - defined for the update operation - is executed. The definition of that trigger copies the NEW record into the AWHST table of the Payment. In addition to the data class property column we find the following columns in a history table:

| Name | |Type | Description |

| awdate | DATETIME | timestamp when the operation was executed |

| awid | BIGINT | id of the record that was modified |

| awop | VARCHAR(6) | operation that was executed INSERT, UPDATE or DELETE |

Users have to take care not to use these names in their class definitions!

Note: Currently relations between data classes are not included in the versioning feature.That is: if there is a relation R between class A and B and the relation is modified that modification will not be versioned.

MySQL Database Statement Example

The DataStore extension create database dependent table and trigger definitions. The data class is defined as:

DataClass Payment Begin

Property[amount, Double, None];

Property[tax, Double, None];

Property[supervisor, String, None];

Property[debit, String, None];

Property[comment, String, None];

Property[credit, String, None];

Property[accountManager, String, None];

Property[currency, String, None];

End

Will be mapped to the following database table:

-- table for Data Class Payment

CREATE TABLE {SCHEMA}."DS_wGTLTNFTQj6evq0pYGaaSg" (

"awid" BIGINT PRIMARY KEY NOT NULL,

"accountManager" VARCHAR(255),

"amount" DOUBLE,

"comment" VARCHAR(255),

"credit" VARCHAR(255),

"currency" VARCHAR(255),

"debit" VARCHAR(255),

"supervisor" VARCHAR(255),

"tax" DOUBLE

);

The history table definition is:

-- history table for Data Class Payment

CREATE TABLE {SCHEMA}."DS_wGTLTNFTQj6evq0pYGaaSgAWHST" (

"awdate" DATETIME NOT NULL,

"awid" BIGINT NOT NULL,

"awop" VARCHAR(6),

"accountManager" VARCHAR(255),

"amount" DOUBLE,

"comment" VARCHAR(255),

"credit" VARCHAR(255),

"currency" VARCHAR(255),

"debit" VARCHAR(255),

"supervisor" VARCHAR(255),

"tax" DOUBLE

);

The triggers for INSERT, UPDATE and DELETE are defined as follows:

-- history trigger(s) for Data Class Payment

DROP TRIGGER IF EXISTS {SCHEMA}.AWHSTDS_wGTLTNFTQj6evq0pYGaaSgINSERT

DROP TRIGGER IF EXISTS {SCHEMA}.AWHSTDS_wGTLTNFTQj6evq0pYGaaSgUPDATE

DROP TRIGGER IF EXISTS {SCHEMA}.AWHTDS_wGTLTNFTQj6evq0pYGaaSgINSERT

DROP TRIGGER IF EXISTS {SCHEMA}.AWHTDS_wGTLTNFTQj6evq0pYGaaSgUPDATE

CREATE TRIGGER {SCHEMA}.AWHSTDS_wGTLTNFTQj6evq0pYGaaSgINSERT AFTER INSERT ON {SCHEMA}."DS_wGTLTNFTQj6evq0pYGaaSg"

FOR EACH ROW BEGIN

INSERT INTO {SCHEMA}."DS_wGTLTNFTQj6evq0pYGaaSgAWHST"("awdate","awid","awop","accountManager","amount","comment","credit","currency","debit","supervisor","tax") VALUES (NOW(),NEW.awid,'INSERT',NEW.accountManager,NEW.amount,NEW.comment,NEW.credit,NEW.currency,NEW.debit,NEW.supervisor,NEW.tax);

END

CREATE TRIGGER {SCHEMA}.AWHSTDS_wGTLTNFTQj6evq0pYGaaSgUPDATE AFTER UPDATE ON {SCHEMA}."DS_wGTLTNFTQj6evq0pYGaaSg"

FOR EACH ROW BEGIN

INSERT INTO {SCHEMA}."DS_wGTLTNFTQj6evq0pYGaaSgAWHST"("awdate","awid","awop","accountManager","amount","comment","credit","currency","debit","supervisor","tax") VALUES (NOW(),NEW.awid,'UPDATE',NEW.accountManager,NEW.amount,NEW.comment,NEW.credit,NEW.currency,NEW.debit,NEW.supervisor,NEW.tax);

END

DROP TRIGGER IF EXISTS {SCHEMA}.TDS_WGTLTNFTQJ6EVQ0PYGAASG_DR

CREATE TRIGGER {SCHEMA}.TDS_WGTLTNFTQJ6EVQ0PYGAASG_DR

AFTER DELETE ON {SCHEMA}."DS_wGTLTNFTQj6evq0pYGaaSg"

FOR EACH ROW BEGIN

DECLARE cnt INTEGER;

DELETE FROM {SCHEMA}."DsRelation" WHERE "sourceEntityId"=OLD."awid" OR "targetEntityId"=OLD."awid";

SELECT count(TABLE_NAME) INTO @cnt FROM information_schema.TABLES WHERE TABLE_NAME='" + tableName + "AWHST' AND TABLE_CATALOG=DATABASE();

IF (@cnt=1) THEN

INSERT INTO {SCHEMA}."DS_wGTLTNFTQj6evq0pYGaaSgAWHST"("awdate","awid","awop","accountManager","amount","comment","credit","currency","debit","supervisor","tax") VALUES (NOW(),OLD.awid,'DELETE',OLD.accountManager,OLD.amount,OLD.comment,OLD.credit,OLD.currency,OLD.debit,OLD.supervisor,OLD.tax);

END IF;

END

Code Example: Database SQL script generated by FNZ Studio DataStore extension

Troubleshooting

LoadDataEntity Function

When you load data using the LoadDataEntity function of the DataStore Extension, you sometimes have null elements in indexed collections.

Null elements in indexed collections appear for one of two reasons:

- Some objects in the collection were deleted by calling the

DeleteDataEntityfunction - Some elements were added to the collection with a specified index greater than the current size of the collection+1.

In the first scenario, references to an object were not removed before the object was deleted.

In the second scenario, adding elements led to the auto-expansion of the collection. This caused all positions in between to be filled with nulls.

Example: A user calls $p.addresses.addElement(102, $a) although the current size of the collection is only 100. In doing this the user stretches the collection, and null appears in the index at position 101.

1. Dealing with Objects to be Deleted

Remove all the references to an object you want to delete by calling .removeElement(DataEntity) on each collection where this element appears. Do this before deleting the object itself.

Example: You have a given data class, Person, and another data class, Address. Person has an indexed collection of Addresses as a property.

If you want to remove one address from a given collection and completely erase it from the DataStore, follow these steps:

- Load the address from the DataStore with the following code snippet:

Copy

Person $p := LoadDataEntity(20002, Person, 'mysql', true, 1);

Address $a := LoadDataEntity(20003, Address, 'mysql', true, 1); - Remove the address from the collection:

Copy

$p.addresses.removeElement($a);

SaveDataEntity($p, 'mysql', true); - Remove the address from existence (erase from DataStore):

Copy

DeleteDataEntity($a, 'mysql', true);

2. Removing Unnecessary Nulls

In case you already have a collection with nulls and you want to get rid of them, use the "FILTER" function:

Person $p := LoadDataEntity(20002, Person, 'mysql', true, 1);

$p.addresses := FILTER($p.addresses, $a != null, $a);

SaveDataEntity($p, 'mysql', true);

Data Store Size

You need to estimate the database size requirements for your data in a data model. The data is persisted via the DataStore Extension in a database.

To solve this issue, you need to have a rough idea of the cardinalities (1..n) of the relationships: roughly how large "n" is. If you cannot estimate this figure, then a default value of 5 is used.

Implement the following method:

Note: The `$ps` variable is for the size of each object, and its units are in bytes

/**

Calculate the database space needed for one instance of the given data class.

@param $dataClassId the id of the data class of which the size requirement must be calculated

@param $cardinalities the cardinality of complex properties, default value 5

@return the size requirement of the given data class.

*/

Function CalculateDatabaseSpace(BusinessObject $bo, Named Integer $cardinalities := {}:Integer) : Integer Begin

Integer $size := 0;

Integer $ps;

Integer $pm;

String $t;

String $c;

com.nm.sdk.data.dataitems.DataProperty $dp;

com.nm.sdk.data.dataitems.DataStructure $class := CAST(com.nm.sdk.data.dataitems.DataStructure, $bo);

ForEach $dp In $class.getProperties() Do

$t := $dp.getType();

$c := $dp.getCollectionType();

$ps := 0;

//

If $dp.getCollectionType() == 'none' Then

$pm := 1;

Else

$pm := TOINTEGER($cardinalities\[$dp.getName()]);

$pm := IF($pm == 0, 5, $pm);

End

If $c != 'none' and CONTAINS('String|Double|Float|Real|Integer|Long|Date|DateTime', $t) Then

// todo estimate size of CLOB

$pm := 1;

$ps := 1024;

ElseIf $t == 'String' Then

$ps := TOINTEGER($class.getMetaData().getProperty('property\_' & $dp.getName()));

$ps := IF($ps == 0, 255, $ps);

ElseIf $t == 'Boolean' Then

$ps := 5;

ElseIf CONTAINS('Double|Float|Real|Integer|Long|Date|DateTime', $t) Then

$ps := 17;

Else

// max size of a DsRelation entry

$ps := 1054;

End

//

$size := $size + $pm \* $ps;

//

PRINTLN(' -\> ' & $dp & ' : ' & $pm & ', ' & $ps);

End

Return $size;

End

//

BusinessObject $bo;

Indexed BusinessObject $bos := FILTER(GETBUSINESSOBJECTS('Data Class', VersionFilter('HeadFilter')), $bo.getMetaData().getProperty('DB') == 'true', $bo);

ForEach $bo In $bos Do

PRINTLN($bo.getId(), ', requires ' & CalculateDatabaseSpace($bo) & 'bytes');

End

Essentially, this method uses reflection to retrieve the type information for the properties, and uses the field lengths stored in metadata in each data class to determine their size. Then, based upon the cardinalities of the collection objects, it tries to do a rough calculation of the size of an object, including its DsRelation records that connect it to its children.

It then cycles through all the data classes and prints out the sizes for a single instance of each. If you know roughly how many instances you are going to have of a particular data class (i.e. what the volumes of transactions, clients, products, etc. are going to be), you can then multiply these values by your calculated object sizes to determine roughly how much database storage is required.

Cardinalities and Calculations

Why does the size of class A not consider the inner cardinalities of Class B and so on?

The class B is not stored in class A but a reference is made between the two in an auxiliary table. The cardinality is used to calculate the size of these references, as well as the size of the column data for a single entry/row within the table for Entity A.

The function is not navigating a tree of objects because the cardinalities passed to it are limited to the current level. What it is instead doing is calculating the size of the simple types and associated references.

Otherwise the calculation could easily be incorrect because you may have multiple Class A instances referencing the same Class B instance. If both A1 and A2 were to share B1, it would be very very difficult to estimate.

As Class A has its data table and Class B has its data table, both can be calculated using the estimate for a single level and its relative relation/reference entries and then multiplied by the expected number of records of each type and summed.

For example: You may expect there to be 5000 Class A instances.

Class A primitive data and related references may be calculated and let's say the value is: 2635.

You may expect there to be 10,000 Class B instance (Some shared and some not). Class B primitive data and related references may be calculated and let's say the value is: 5287.

Again, the same thing for C: let's say 10,000 instances with size 5950

So you would now sum these ((5000 * 2635) + (10000 * 5287) + (10000 * 5950)) / (1024 * 1024) = Total size in MB Roughly 4.9 MB

GDPR Compliance

The General Data Protection Regulation (GDPR) is an EU regulation aimed at protecting the privacy of natural persons regarding the processing of personal data and the free movement of such data. The purpose of GDPR, among others, is to strengthen and to unify data protection laws within the EU for individuals, ensuring individuals have control of their personal data.

Important! The DataStore extension stores data and as such it can be used to persist information on data subjects (natural persons). Implementing a data persistence strategy that is compliant with the regulations stipulated by GDPR must be guaranteed by the implementation of the Solution by the customer or entity using this product. Compliance is not inherent in any way to the product itself.

This document and the content thereof and any information derived from it is not to be construed as legal, regulatory and/or tax advice. FNZ Studio is not permitted to and will not provide any legal, regulatory and/or tax advice. FNZ Studio is also not providing an interpretation of any laws or regulations that may be applicable to any customer, prospect, or anyone, and they shall be responsible for clarifying and stipulating the legal and regulatory requirements applicable to its business. While FNZ Studio personnel providing the services may, through experience or specialized training or both, be familiar with the general regulatory environment in their capacity as information-technology and management-consulting professionals, they will work under the direction of the customer and its legal counsels regarding the specific legal and regulatory requirements under which the customer operates. The compliance of the services and work results with the applicable laws and requirements remains the sole responsibility of customer and their legal, regulatory and/or tax advisors.